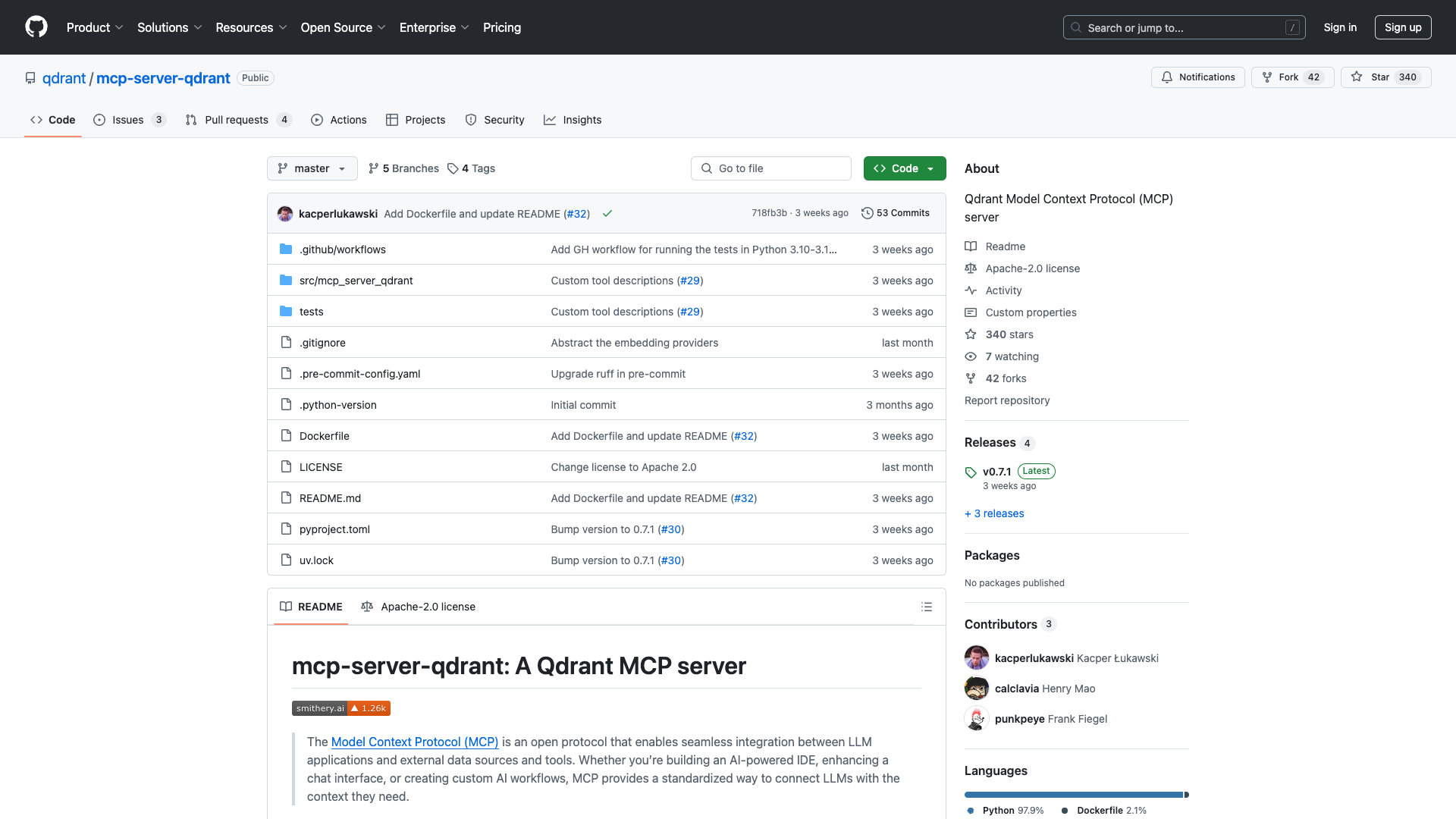

Overview

The Qdrant Model Context Protocol (MCP) server is an open-source implementation that connects large language model (LLM) applications to the Qdrant vector database, giving AI-driven applications a way to store and retrieve contextual information. Developed by the Qdrant team, it is aimed at developers who want to add this kind of retrieval to their AI workflows.

Key Features

- Seamless Integration: Because the server implements the Model Context Protocol, an open standard, any MCP-compatible client can connect an LLM to Qdrant without custom integration code, which simplifies building AI applications.

- Semantic Memory Layer: The server acts as a memory layer on top of the Qdrant vector search engine. Information is stored as vector embeddings and retrieved by semantic similarity rather than by exact keywords, which makes it quick to recover relevant context when switching between tasks.

- Flexible Configuration: The server is configured through environment variables rather than command-line arguments, so the same installation can point at either a local or a remote Qdrant instance.

- Multiple Transport Options: In addition to the default standard input/output (stdio) transport used by locally launched clients, the server supports Server-Sent Events (SSE), which lets it run as a network service that remote clients connect to, for example in cloud deployments.
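
To make the idea of a semantic memory layer concrete, the toy sketch below ranks stored notes by cosine similarity between their vectors and a query vector. It illustrates the general technique only and is not the server's code: the three-dimensional vectors and the `rank_by_cosine` helper are invented for this example, whereas a real setup obtains much longer vectors from an embedding model (configured via `EMBEDDING_MODEL`) and lets Qdrant perform the search.

```shell
#!/usr/bin/env sh
# Toy semantic search (hypothetical helper, not part of mcp-server-qdrant).
# rank_by_cosine QUERY_VECTOR < NOTES
#   QUERY_VECTOR: space-separated numbers, e.g. "0.2 0.8 0.1"
#   NOTES: one note per line, "<space-separated vector><TAB><note text>"
# Prints "<similarity><TAB><note text>", most similar note first.
# Assumes all vectors are non-zero and have the same length as the query.
rank_by_cosine() {
  awk -F '\t' -v q="$1" '
    BEGIN {
      n = split(q, qv, " ")
      qnorm = 0
      for (i = 1; i <= n; i++) qnorm += qv[i] * qv[i]
    }
    {
      split($1, v, " ")
      dot = 0; vnorm = 0
      for (i = 1; i <= n; i++) { dot += v[i] * qv[i]; vnorm += v[i] * v[i] }
      # cosine similarity = dot product / (product of the vector lengths)
      printf "%.3f\t%s\n", dot / sqrt(qnorm * vnorm), $2
    }' | sort -t "$(printf '\t')" -k1,1rn
}

# Hand-made 3-D "embeddings": the query vector points in nearly the same
# direction as the second note, so that note is ranked first.
printf '0.9 0.1 0.0\tDeploy with docker run\n0.1 0.9 0.2\tStaging DB listens on 6333\n' |
  rank_by_cosine "0.2 0.8 0.1"
```

Cosine similarity compares direction rather than magnitude, which is why it is a common choice for comparing text embeddings; the server's actual distance metric is not covered here.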

Installation and Setup

Setting up mcp-server-qdrant is straightforward. Here is a brief guide:

- Clone the repository from GitHub (needed for the Docker build below) and change into it:

```shell
git clone https://github.com/qdrant/mcp-server-qdrant/
cd mcp-server-qdrant
```

- Install Docker for a containerized deployment, or install uv (which provides the `uvx` command) to run the server directly.

- Configure your environment variables:
  - Set either `QDRANT_URL` for a remote Qdrant instance or `QDRANT_LOCAL_PATH` for a local setup.
  - Define additional parameters such as `COLLECTION_NAME` and `EMBEDDING_MODEL`.

- To run the server directly, use:

```shell
uvx mcp-server-qdrant
```

- For Docker deployment, build the image from the repository root and run it:

```shell
docker build -t mcp-server-qdrant .

docker run -p 8000:8000 \
  -e QDRANT_URL="http://your-url" \
  -e COLLECTION_NAME="your-collection" \
  mcp-server-qdrant
```
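
Once the server is running, an MCP client talks to it with JSON-RPC 2.0 messages. The sketch below prints the `tools/call` requests a client would send to store a note and later search for it. The tool names `qdrant-store` and `qdrant-find` and their `information` and `query` arguments are taken from the project's README and may differ between versions; the `mcp_tool_call` helper is hypothetical. A real client must also complete MCP's `initialize` handshake before calling tools, so piping these lines into the server on their own will not work.

```shell
#!/usr/bin/env sh
# Hypothetical helper: print one MCP "tools/call" JSON-RPC 2.0 request.
#   $1: request id   $2: tool name   $3: tool arguments as a JSON object
# The arguments are inserted verbatim, so $3 must already be valid JSON.
mcp_tool_call() {
  printf '{"jsonrpc":"2.0","id":%s,"method":"tools/call","params":{"name":"%s","arguments":%s}}\n' \
    "$1" "$2" "$3"
}

# Store a piece of information in the semantic memory...
mcp_tool_call 1 qdrant-store '{"information":"The staging database listens on port 6333"}'

# ...and later retrieve it with a query phrased differently.
mcp_tool_call 2 qdrant-find '{"query":"which port does staging use?"}'
```

Over the stdio transport, each message is written as a single line; over SSE the same JSON bodies are sent via HTTP instead.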

User Experience

Users have reported a largely positive experience with the Qdrant MCP server, particularly appreciating its seamless integration and the semantic memory layer. Being able to retrieve relevant data quickly is especially useful in coding environments, where developers switch context frequently.

However, some users have noted initial confusion regarding the configuration process, suggesting that clearer documentation could benefit newcomers. The reliance on environment variables is a double-edged sword; while it provides flexibility, it may also pose a challenge for those less familiar with such setups.
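
One way to reduce that initial confusion is a small pre-flight check run before starting the server. The sketch below is a hypothetical helper, not part of the project: it reports an error unless exactly one of `QDRANT_URL` or `QDRANT_LOCAL_PATH` is set, since the two select a remote or a local backend, and warns when `COLLECTION_NAME` is empty. The path and collection name in the commented example are placeholders.

```shell
#!/usr/bin/env sh
# Hypothetical pre-flight check (not part of mcp-server-qdrant).
# Returns 0 when exactly one Qdrant backend is configured, 1 otherwise.
check_qdrant_env() {
  if [ -n "${QDRANT_URL:-}" ] && [ -n "${QDRANT_LOCAL_PATH:-}" ]; then
    echo "error: set QDRANT_URL or QDRANT_LOCAL_PATH, not both" >&2
    return 1
  fi
  if [ -z "${QDRANT_URL:-}" ] && [ -z "${QDRANT_LOCAL_PATH:-}" ]; then
    echo "error: set QDRANT_URL (remote) or QDRANT_LOCAL_PATH (local)" >&2
    return 1
  fi
  # Only a warning: whether a default collection is required may depend
  # on the server version.
  if [ -z "${COLLECTION_NAME:-}" ]; then
    echo "warning: COLLECTION_NAME is not set" >&2
  fi
  return 0
}

# Example local setup (placeholder values):
# export QDRANT_LOCAL_PATH="$HOME/.qdrant-mcp"
# export COLLECTION_NAME="my-notes"
# check_qdrant_env && uvx mcp-server-qdrant
```

Failing fast with a readable message is usually easier to debug than a server that starts and then cannot reach its storage.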

The support for multiple transport methods, including SSE, has been highlighted as a strong point, especially by developers working in cloud environments. Because an SSE server is reachable over the network, a single deployment can be shared by team members in different locations.
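
For readers curious what an SSE connection looks like, the sketch below reads an event stream and extracts the first `endpoint` event. Under the HTTP+SSE transport described in the MCP specification, the server uses this event to tell the client which URL to POST its JSON-RPC messages to. The `sse_endpoint` helper is hypothetical, and the URL in the usage comment assumes the server was started with `--transport sse` on port 8000 using the `/sse` path that the MCP Python SDK uses by default; adjust both for your deployment.

```shell
#!/usr/bin/env sh
# Hypothetical helper: read a Server-Sent Events stream on stdin and print
# the data of the first "endpoint" event. Simplification: assumes the
# "event:" line comes before the "data:" line within each event.
sse_endpoint() {
  event=""
  while IFS= read -r line; do
    line=${line%"$(printf '\r')"}        # tolerate CRLF line endings
    case $line in
      "event:"*) event=${line#event:}; event=${event# } ;;
      "data:"*)
        if [ "$event" = "endpoint" ]; then
          data=${line#data:}
          printf '%s\n' "${data# }"
          return 0
        fi ;;
      "") event="" ;;                    # a blank line ends the current event
    esac
  done
  return 1                               # stream ended without an endpoint event
}

# Usage (assumed URL; requires a running server):
# curl -sN http://localhost:8000/sse | sse_endpoint
```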

Community and Support

The Qdrant MCP server is supported by an active community of developers interested in AI applications and memory management. Contributions are welcome through GitHub issues and pull requests.

Conclusion

In summary, the Qdrant MCP server is a solid option for developers who want to connect their AI applications to a vector database. It offers clear advantages in productivity and flexibility, though onboarding and documentation could be improved to make it accessible to a wider audience. Overall, it is a promising tool for giving AI workflows persistent, searchable memory.

License: This project is open-source, and contributions are welcome to further enhance its capabilities.
