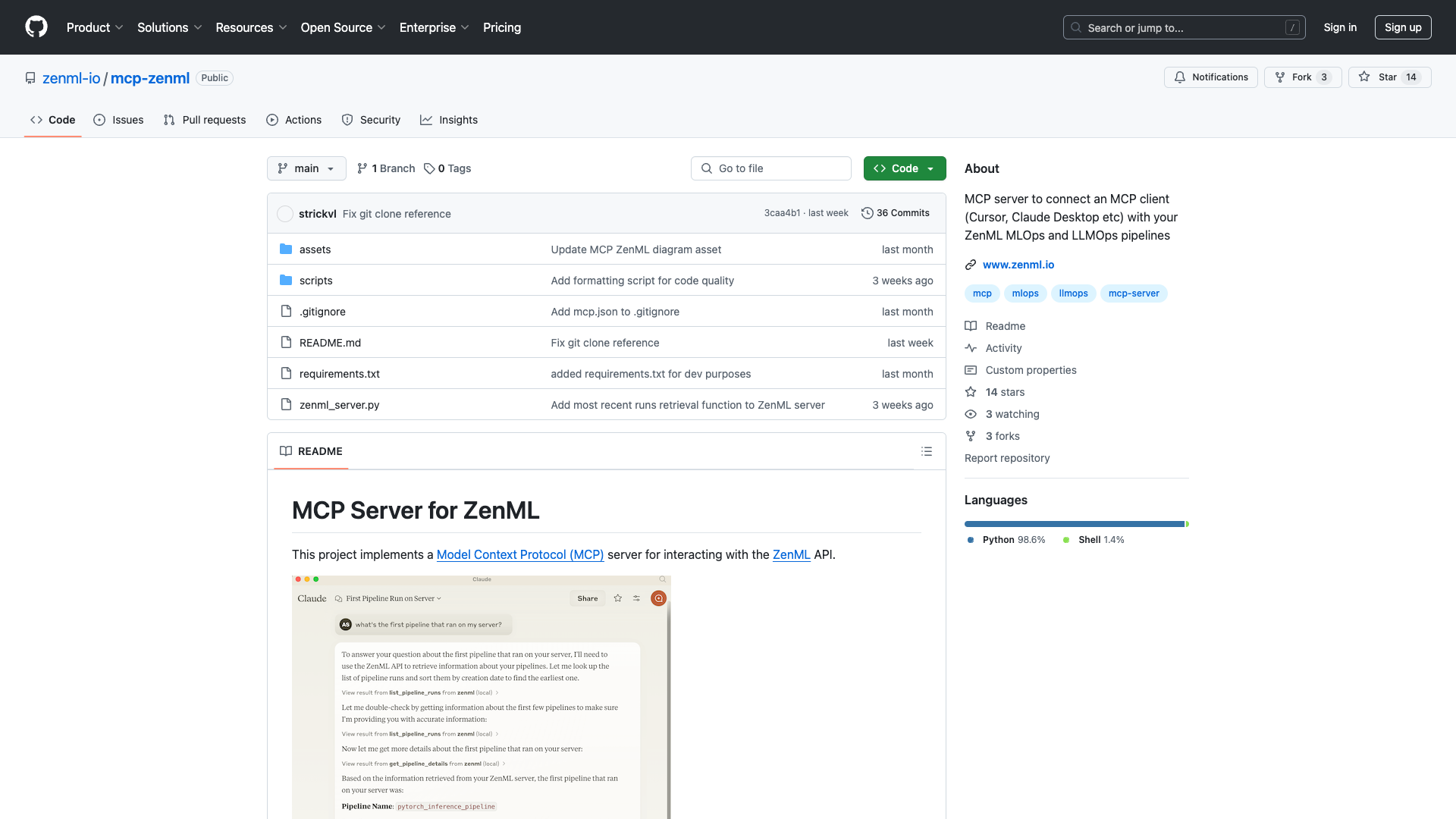

The mcp-zenml project is a Model Context Protocol (MCP) server built by the ZenML team. It connects MCP clients, such as Cursor and Claude Desktop, to ZenML's MLOps and LLMOps pipelines. The server acts as a connector, making it easier for data scientists, machine learning engineers, and software developers to integrate AI into their workflows without extensive code modifications.

Key Features

Seamless Integration:

The mcp-zenml server connects multiple MCP clients to existing ML pipelines, reducing the friction of incorporating new tools into your workflow.

Live Metadata Access:

Users can view live metadata, such as pipeline runs and stack components, from within their MCP client. This supports informed decisions during model training without constant context-switching.

Trigger New Runs:

This feature lets users start new runs directly from compatible MCP clients, so they can act immediately on what they observe.

User-Friendly Configuration:

The simplified JSON-based configuration makes setup straightforward, ensuring that both seasoned professionals and those newer to the field can navigate the installation process with ease.
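As a rough illustration of what such a JSON-based configuration can look like, the sketch below builds an entry in the `mcpServers` layout that MCP clients such as Claude Desktop read from their configuration file. The server label `zenml`, the `uv` launch command, the script path, and the environment variable names and values are all assumptions made for illustration, not the project's documented settings; the repository's README is the place to find the actual values.

```python
import json


def build_client_config(server_script: str, store_url: str, api_key: str) -> dict:
    """Build an MCP client config entry (hypothetical values throughout)."""
    return {
        "mcpServers": {
            # "zenml" is an arbitrary label chosen for this sketch.
            "zenml": {
                # Assumed launcher; any command that starts the server would do.
                "command": "uv",
                "args": ["run", server_script],
                # Assumed environment variable names for the ZenML connection.
                "env": {
                    "ZENML_STORE_URL": store_url,
                    "ZENML_STORE_API_KEY": api_key,
                },
            }
        }
    }


config = build_client_config(
    server_script="path/to/zenml_server.py",  # placeholder path
    store_url="https://your-zenml-server.example.com",  # placeholder URL
    api_key="<your-api-key>",  # placeholder secret
)
config_json = json.dumps(config, indent=2)
print(config_json)
```

Generating the file from a small function like this keeps secrets out of hand-edited JSON and makes it easy to emit the same entry for more than one client.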

Usability and User Experience

Once installed, the mcp-zenml server has an intuitive architecture: it follows a client-server model in which MCP hosts request data from the server through the protocol. The documentation is generally helpful, but more detailed examples would improve it, particularly for newcomers and for those exploring advanced integrations.
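To make the client-server exchange more concrete: MCP messages are JSON-RPC 2.0, and a host invokes a server capability with a `tools/call` request. The sketch below builds such a request and checks that a response belongs to it. The tool name `list_pipeline_runs` and its arguments are hypothetical, chosen only to illustrate the message shape; the tools the server actually exposes may be named differently.

```python
import json
from itertools import count

_request_ids = count(1)  # each JSON-RPC request carries a unique id


def make_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def is_response_to(request: dict, response: dict) -> bool:
    """A response belongs to a request when the ids match."""
    return response.get("jsonrpc") == "2.0" and response.get("id") == request["id"]


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call("list_pipeline_runs", {"limit": 5})
print(json.dumps(request, indent=2))
```

In practice the MCP client handles this exchange itself; the sketch only shows what travels over the wire when an assistant asks the server for data.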

Likes and Dislikes

Pros:

- Efficiency Improvement: The integration capabilities have improved workflows significantly, allowing for smoother operations across various tools.

- Real-Time Insights: Accessing live data alleviates manual tracking burdens, allowing users to focus on more critical tasks.

- Community Engagement: The active Slack community fosters collaboration and provides avenues for feedback, ensuring users feel supported.

Cons:

- Beta Status Frustration: As the project is still in its beta phase, users may encounter occasional bugs that interrupt workflow.

- Limited Documentation Depth: While initial setup is straightforward, the lack of deep dives into advanced functionality can keep users from getting the most out of the server.

Company Perspective

ZenML demonstrates a commitment to continuous improvement through active engagement with users, welcoming constructive criticism and feedback. Their reputation in the industry suggests a strong foundation for the mcp-zenml server, and ongoing development is likely to enhance its capabilities further.

Conclusion

In summary, the mcp-zenml server aligns well with the needs of experienced tech professionals seeking to optimize their AI-driven workflows. Despite minor challenges related to its beta status and documentation depth, the server offers a robust solution for integrating AI models with operational frameworks. If you are heavily involved in MLOps contexts and looking to enhance your productivity, the mcp-zenml server is certainly worth exploring.

License Information: The mcp-zenml project is open-source and available on GitHub.
