Langfuse MCP Server

by Langfuse

The Langfuse MCP server is a specialized tool for managing prompts within AI workflows, designed for developers and teams seeking to optimize their interactions with AI models.

Last updated: 2025-04-01T11:49:17.307464

Prompt Discovery: List all available prompts through the prompts/list endpoint, with optional cursor-based pagination.

Specific Prompt Retrieval: Retrieve an individual prompt through prompts/get, transformed into an MCP-compatible object ready for immediate use.

Integration Ready: Designed for integration with environments such as Claude Desktop, enabling quick deployment.

Community-Driven Development: The project is open source, so developers can contribute enhancements or address issues collaboratively.

The Langfuse MCP Server is an implementation of the Model Context Protocol (MCP) built specifically for managing prompts in Langfuse. It is aimed at developers, DevOps engineers, and other technical professionals who want to streamline their AI workflows through efficient prompt management.

Setting up the Langfuse MCP server is straightforward for users with intermediate technical skills. The process involves cloning the repository, installing dependencies, building files, and configuring settings, all of which are familiar tasks for developers. The clear instructions provided on the website help guide users through this process, although additional resources such as video tutorials could enhance the onboarding experience for newcomers.
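The configuration step usually means registering the built server with an MCP client. As a rough sketch, the snippet below generates a Claude Desktop style entry under the `mcpServers` key. The entry name `langfuse`, the `build/index.js` path, the environment variable names, and the default base URL are all assumptions made for illustration; the repository's own instructions are the authority on the real values.

```python
import json


def build_client_config(server_path, public_key, secret_key,
                        base_url="https://cloud.langfuse.com"):
    """Return a Claude Desktop style config dict with one MCP server entry.

    The entry name "langfuse", the env var names, and the default base URL
    are assumptions for illustration, not confirmed by this article.
    """
    return {
        "mcpServers": {
            "langfuse": {
                # Launch the built server with Node.js (assumed runtime).
                "command": "node",
                "args": [server_path],
                "env": {
                    "LANGFUSE_PUBLIC_KEY": public_key,
                    "LANGFUSE_SECRET_KEY": secret_key,
                    "LANGFUSE_BASEURL": base_url,
                },
            }
        }
    }


if __name__ == "__main__":
    config = build_client_config(
        "/path/to/server/build/index.js",  # hypothetical path to the build output
        "pk-lf-your-public-key",           # placeholder credentials
        "sk-lf-your-secret-key",
    )
    # Paste the printed JSON into the client's configuration file.
    print(json.dumps(config, indent=2))
```

Generating the entry from code rather than editing JSON by hand keeps credentials out of copy-pasted snippets and makes it easy to produce one entry per environment.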

The standout feature of the Langfuse MCP server is its prompt discovery functionality. The prompts/list endpoint allows users to quickly retrieve a comprehensive list of available prompts. The optional cursor-based pagination feature prevents users from being overwhelmed by data, reflecting an understanding of usability principles. However, a notable limitation is the lack of detailed descriptions for the arguments accompanying each prompt name, which can lead to confusion about their usage.
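To make the cursor-based pagination concrete, here is a minimal client-side sketch. It assumes the usual MCP shape for `prompts/list`: a JSON-RPC 2.0 request with an optional `cursor` parameter, and a result holding a `prompts` array plus an optional `nextCursor`. The `send` callable is a hypothetical transport (stdio, HTTP, or a test double) and is not part of the Langfuse server.

```python
from typing import Callable, Dict, Iterator, Optional


def list_prompts_request(request_id: int, cursor: Optional[str] = None) -> Dict:
    """Build a JSON-RPC 2.0 request for the MCP `prompts/list` method."""
    request = {"jsonrpc": "2.0", "id": request_id, "method": "prompts/list"}
    if cursor is not None:
        # The cursor is opaque: pass back exactly what the server returned.
        request["params"] = {"cursor": cursor}
    return request


def iter_prompts(send: Callable[[Dict], Dict]) -> Iterator[Dict]:
    """Yield every prompt, following `nextCursor` until it is absent.

    `send` is a hypothetical transport: it takes a request dict and
    returns the parsed JSON-RPC response dict.
    """
    cursor = None
    request_id = 1
    while True:
        response = send(list_prompts_request(request_id, cursor))
        result = response["result"]
        yield from result.get("prompts", [])
        cursor = result.get("nextCursor")
        if not cursor:
            break  # no further pages
        request_id += 1
```

Because `iter_prompts` is a generator, a caller can stop after the first few prompts without fetching the remaining pages.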

The prompts/get functionality retrieves a specific prompt and transforms it into an MCP-compatible object that client applications can use directly. This flexibility opens up possibilities for integrating tools across different environments without extensive adjustments.
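The kind of transformation involved can be sketched as follows. This is an illustration only, not the server's actual code: it assumes a text prompt with double-brace placeholders such as `{{topic}}`, and it assumes the result is a single user message in the MCP prompt shape (`messages` with `role` and a text `content`). The function names are hypothetical.

```python
import re
from typing import Dict

# Matches {{name}} and {{ name }} style placeholders (assumed template syntax).
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def get_prompt_request(request_id: int, name: str, arguments: Dict[str, str]) -> Dict:
    """Build a JSON-RPC 2.0 request for the MCP `prompts/get` method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "prompts/get",
        "params": {"name": name, "arguments": arguments},
    }


def to_mcp_prompt(template: str, arguments: Dict[str, str],
                  description: str = "") -> Dict:
    """Fill placeholders and wrap the text as an MCP-style prompt result."""
    def substitute(match):
        key = match.group(1)
        # Leave unknown placeholders untouched rather than guessing a value.
        return arguments.get(key, match.group(0))

    text = _PLACEHOLDER.sub(substitute, template)
    return {
        "description": description,
        "messages": [
            {"role": "user", "content": {"type": "text", "text": text}}
        ],
    }


if __name__ == "__main__":
    result = to_mcp_prompt("Summarize {{topic}} briefly.", {"topic": "MCP"})
    print(result["messages"][0]["content"]["text"])
```

Leaving unmatched placeholders in the output makes a missing argument visible to the caller instead of silently producing an incomplete prompt.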

Langfuse has cultivated a vibrant open-source community around the MCP server. This commitment to community engagement is crucial for continuous improvement and responsiveness to user feedback. Active participation on GitHub showcases their dedication to enhancing the project and fostering collaborative contributions from developers passionate about advancing machine learning technologies.

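While the Langfuse MCP server offers robust capabilities, there are areas that could benefit from improvement, notably the missing descriptions for prompt arguments in the prompt list and the lack of onboarding resources such as video tutorials.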

In summary, the Langfuse MCP server aligns closely with the needs of modern integrations involving artificial intelligence workflows. Its design focuses on efficiency and productivity, making it a valuable tool for developers and technical professionals. While there are areas for enhancement, the server's capabilities and community-driven development reflect a promising future. The blend of utility and developer-friendly operation positions the Langfuse MCP server as a strong contender in the market for prompt management tools, ready to evolve and adapt to the ever-changing demands of the tech landscape.

License Information: This project is open-source and hosted on GitHub, allowing for collaborative contributions and enhancements.

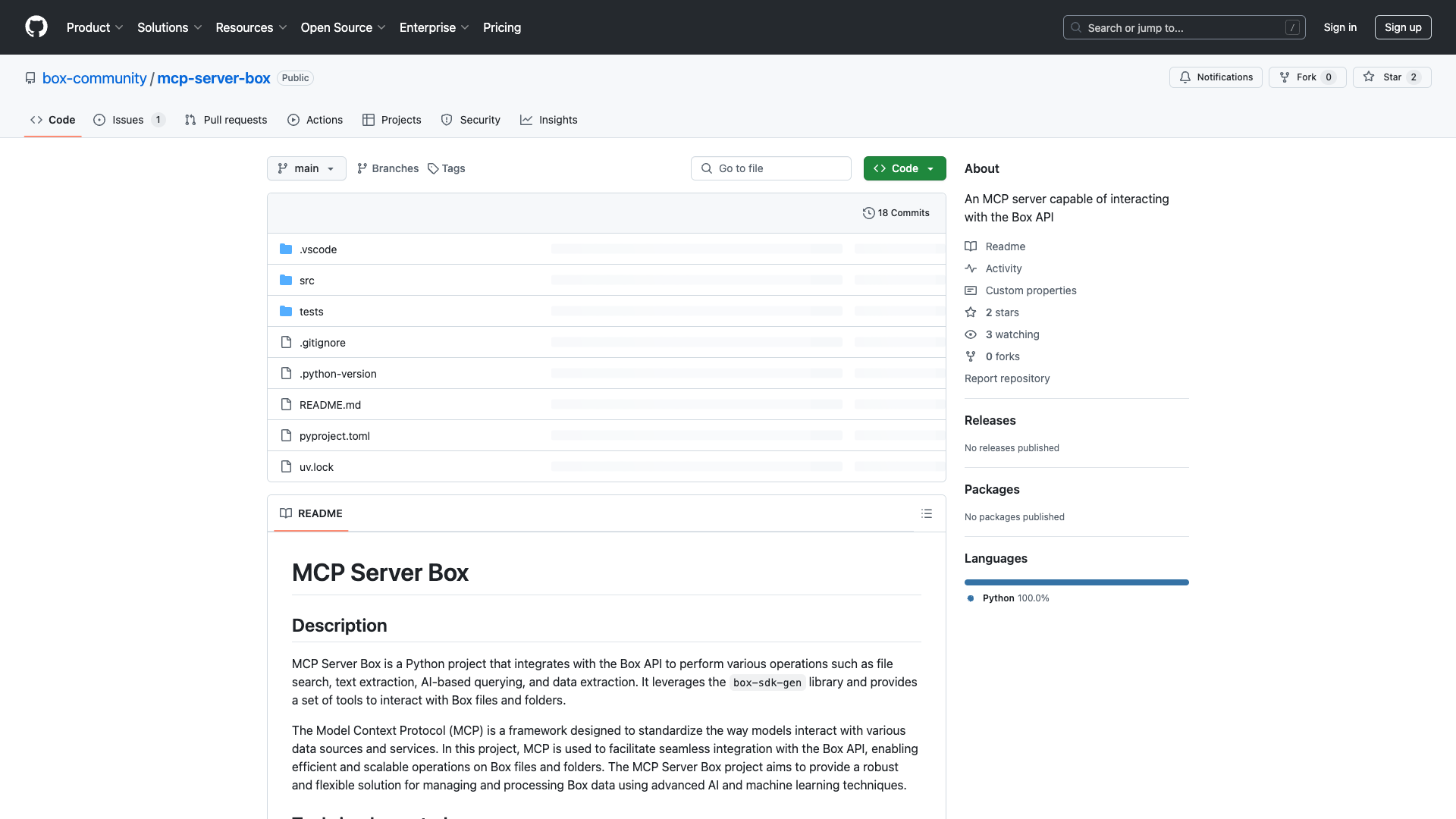

The MCP Server Box is a powerful tool designed for developers looking to enhance their interaction with the Box API, offering advanced features for file management and automation.

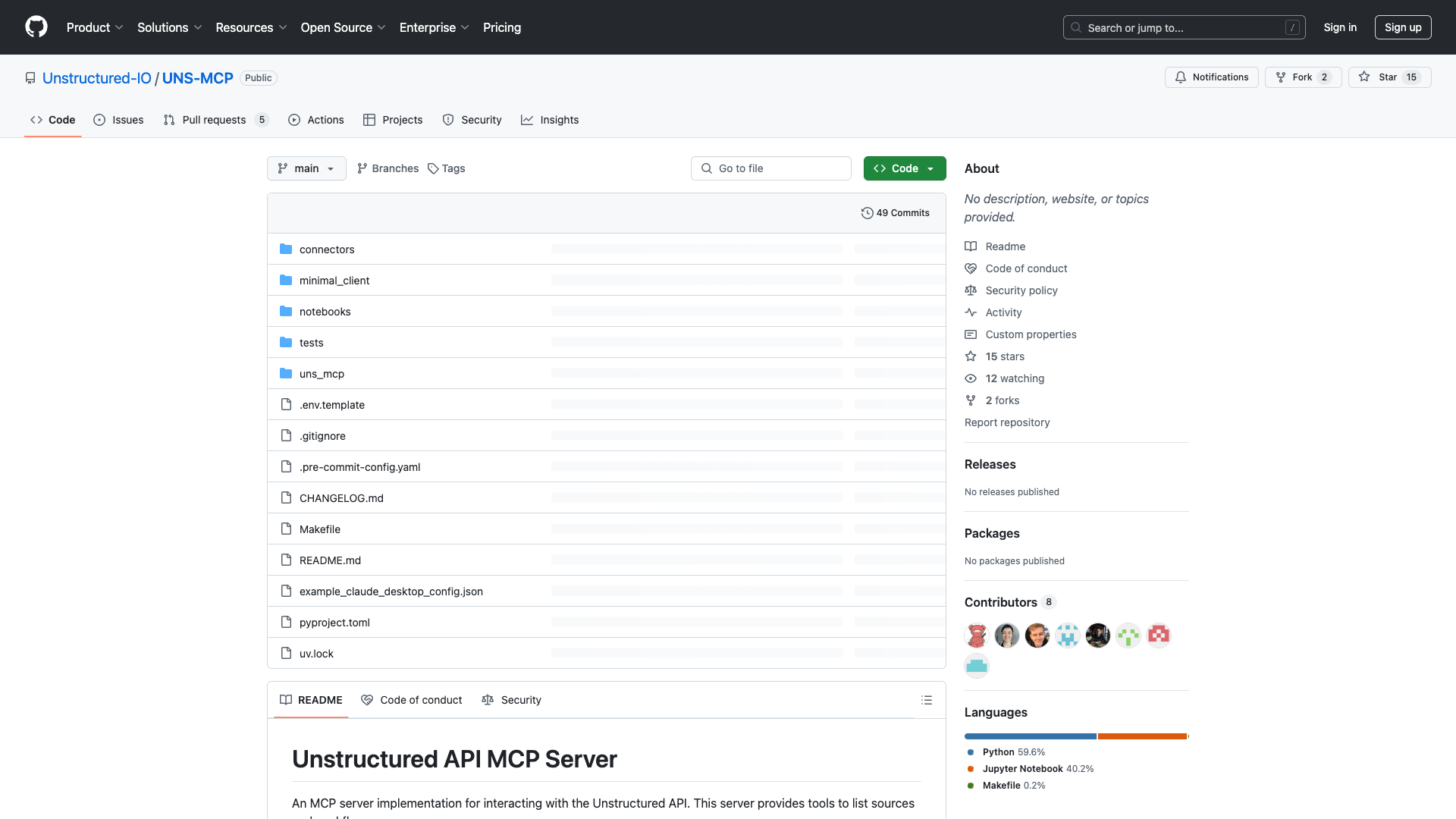

The UNS-MCP server is a powerful tool for developers and data scientists looking to leverage web crawling and LLM-optimized text generation capabilities.

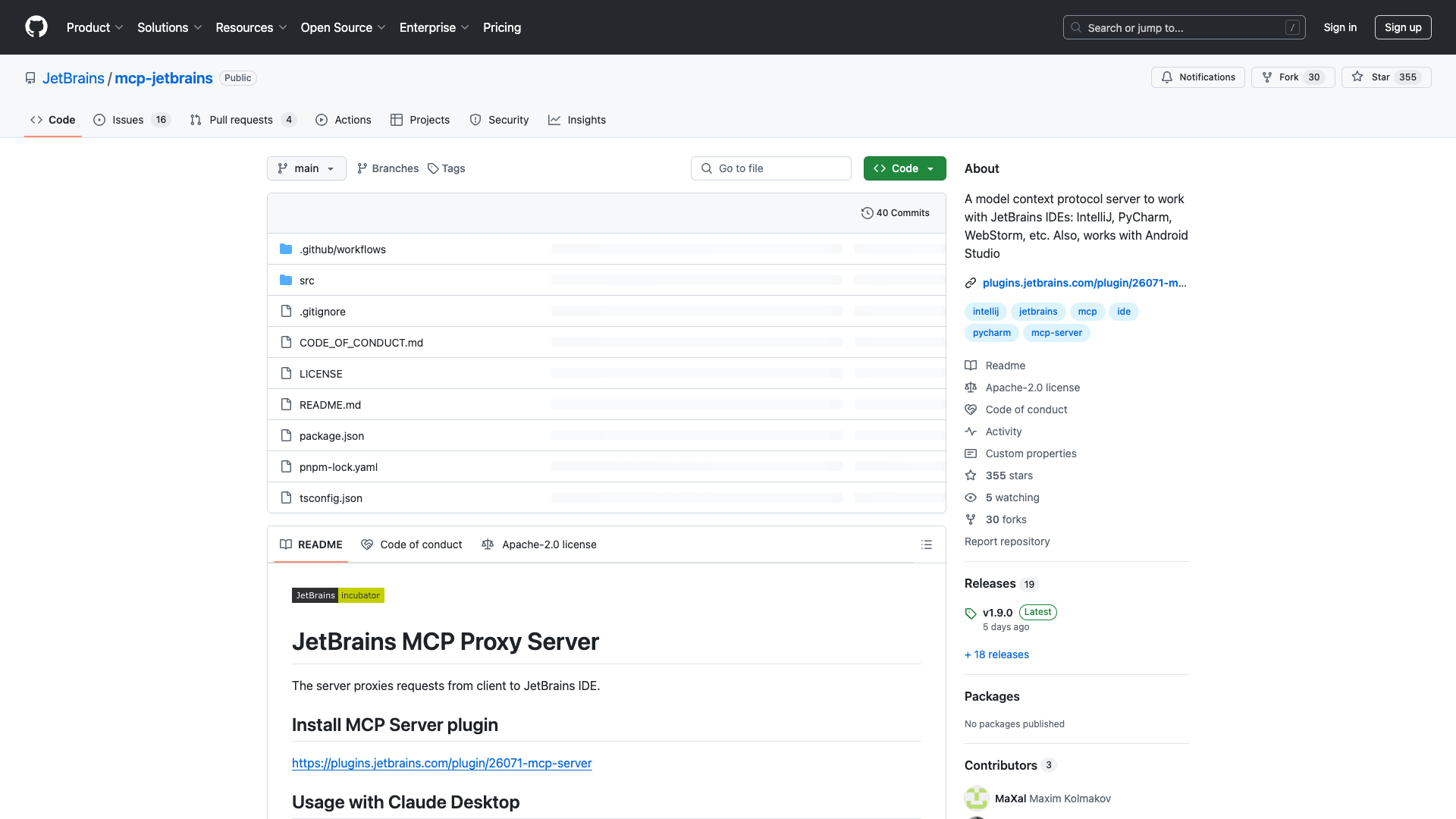

The MCP server enhances productivity for developers by facilitating seamless interactions between JetBrains IDEs like IntelliJ IDEA and PyCharm, allowing for advanced integrations and streamlined workflows.